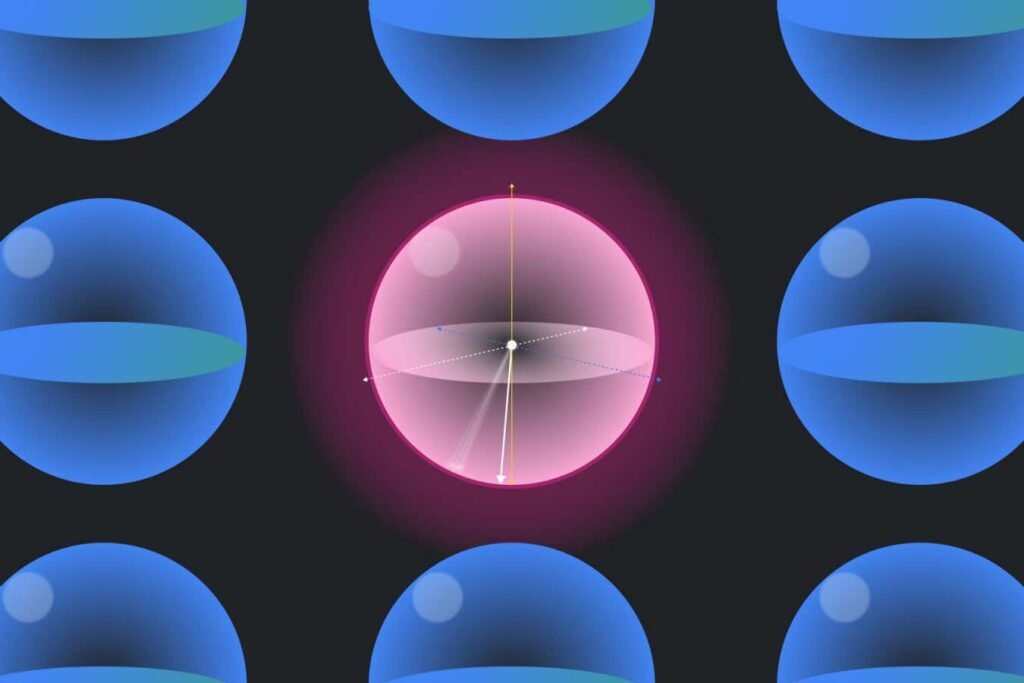

Quantum bits or qubits can be thought of as representing data on a sphere

Google DeepMind

Google DeepMind has developed an AI model that can improve the performance of quantum computers by correcting errors more effectively than any existing method, bringing these devices a step closer to wider use.

Quantum computers perform calculations on quantum bits, or qubits, which are units of information that can store multiple values at the same time, unlike classical bits, which can hold either 0 or 1. These qubits, however, are fragile and prone to errors when disturbed by factors such as ambient heat or a passing cosmic ray.

To correct these errors, researchers can group qubits together to form a so-called logical qubit, where some qubits are used for computation while others are reserved to detect errors. The information from these latter qubits must be interpreted, often by a classical computing algorithm, to work out how to correct the errors, in a process called decoding. It’s a difficult task, but it is closely tied to a quantum computer’s overall error-correcting ability, which in turn dictates its ability to perform useful real-world tasks.
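As a rough illustration of what decoding means, here is a minimal classical sketch. It assumes a three-bit repetition code, which is far simpler than the codes run on real quantum hardware and is not how AlphaQubit works. All the function names are hypothetical. Parity checks between neighbouring bits play the role of the error-detecting qubits, and a small lookup table acts as the decoder that turns those check results into a correction.

```python
from typing import List, Optional, Tuple

def encode(bit: int) -> List[int]:
    """Store one logical bit redundantly in three physical bits."""
    return [bit, bit, bit]

def syndrome(data: List[int]) -> Tuple[int, int]:
    """Parity checks between neighbours: a stand-in for the error-detecting qubits."""
    return (data[0] ^ data[1], data[1] ^ data[2])

# Decoder as a lookup table (an assumption for illustration only):
# each syndrome maps to the position most likely to have flipped.
LOOKUP = {
    (0, 0): None,  # no check fired, so assume no error
    (1, 0): 0,     # only the first check fired: bit 0 flipped
    (1, 1): 1,     # both checks fired: bit 1 flipped
    (0, 1): 2,     # only the second check fired: bit 2 flipped
}

def decode(synd: Tuple[int, int]) -> Optional[int]:
    """Interpret the check results and pick which bit to flip back."""
    return LOOKUP[synd]

def correct(data: List[int]) -> List[int]:
    """Apply the correction suggested by the decoder to a copy of the data."""
    fixed = list(data)
    flip = decode(syndrome(fixed))
    if flip is not None:
        fixed[flip] ^= 1
    return fixed

if __name__ == "__main__":
    word = encode(0)
    word[1] ^= 1                # simulate a single bit-flip error
    print(syndrome(word))       # which checks fired
    print(correct(word))        # data after the decoder's correction
```

This toy decoder can only handle one flipped bit. Real decoders must cope with many qubits, noisy check measurements and correlated errors, which is what makes the task hard.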

Now, Johannes Bausch at Google DeepMind and his colleagues have developed an artificial intelligence model, called AlphaQubit, that can decode these errors better and faster than any existing algorithm.

“Designing a decoder for a quantum error-correction code, if you’re interested in very, very high accuracy, is very non-trivial,” Bausch told reporters at a Nov. 2 press conference. “AlphaQubit learns this high-precision decoding task without humans actively designing the algorithm for it.”

To train AlphaQubit, Bausch and his team used a transformer neural network, the same technology that powers the Nobel Prize-winning protein-prediction AI AlphaFold and large language models like ChatGPT, to learn how data from the error-detecting qubits corresponds to qubit errors. First, they trained the model on data from a simulation of what the errors would look like, before fine-tuning it on real-world data from Google’s Sycamore quantum computing chip.

In experiments on a small number of qubits on the Sycamore chip, Bausch and his team found that AlphaQubit makes 6 percent fewer errors than the next-best algorithm, a so-called tensor network. But tensor networks get slower as quantum computers get bigger, so they can’t scale to future machines, whereas AlphaQubit appears to be able to run just as fast, according to simulations, making it a promising tool as these computers grow, Bausch says.

“It’s incredibly exciting,” says Scott Aaronson at the University of Texas at Austin. “It’s been clear for a long time that decoding and correcting errors fast enough, in fault-tolerant quantum computing, would push classical computing to its limits. It’s also become clear that for almost anything classical computers do that involves optimization or uncertainty, you can now throw in machine learning and they’d do better.”
